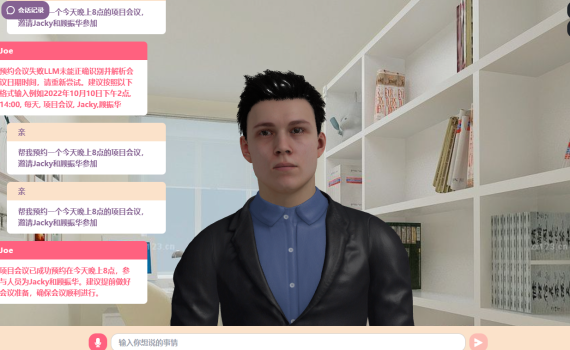

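LangChain tools often reply: "The provided schedule query tool is of little help, so I will answer directly."

Symptom

I added several tools, hoping to implement some custom functionality within LangChain's agent framework. At run time, however, the agent often replies: "The provided schedule query tool is of little help, so I will answer directly." (original output: “提供的日程查询工具帮助较小,我将直接回答。”)

Conclusion

Tracking this down was long and tedious, so here is the conclusion up front: adjust your prompt to fit the LLM. Different LLMs produce different results, so if you want to turn this into a product you need to settle on one LLM engine and tune the prompt against it yourself.

Problem analysis

Original request (the tool descriptions were written in Chinese and are shown here in English):

{'method': 'post', 'url': '/chat/completions', 'files': None, 'json_data': {'messages': [{'role': 'user', 'content': 'Answer the following questions as best you can. You have access to the following tools:\n\n
python_executor: This tool executes the Python code snippet passed in and returns the execution result\n
ScheduleAdder: Used to set a schedule. It takes 3 parameters: the first is the time, the second is the recurrence rule (e.g. \'1000100\' means repeat on Monday and Friday, \'0000000\' means no repeat), and the third is the task to perform, e.g. (\'15:15\', \'0000001\', \'remind the owner to order coffee\')\n
weather: This tool gets weather forecast information. Pass in the English city name; parameter format: Guangzhou\n
CheckSensor: This tool queries the online status, sensor data, and on/off state of the IoT devices in the meeting room\n
Switch: This tool switches the air conditioner, projector, curtains, lights, terminal, heating, and screen caster on or off; parameter format: ("air conditioner", "on"); returns True on success\n
Knowledge: This tool queries professional knowledge about the XX video conferencing system. Pass the question as the parameter, e.g. "Does the XXX hardware terminal support H.265+RTC meetings"\n
ScheduleDBQuery: Used to query all schedules. The returned data contains 3 fields: the time, the recurrence rule (e.g. \'1000100\' means repeat on Monday and Friday, \'0000000\' means no repeat), and the task to perform\n
ScheduleDBDelete: Used to delete a schedule; takes the task id as the parameter, e.g. 2\n
GetSwitchLog: This tool queries the current day's operation history of the IoT device switches in the meeting room\n
getOnRunLinkage: This tool queries the linkages currently running on the IoT devices in the meeting room, as well as environment monitoring such as temperature, humidity, and smoke detection\n
WebPageRetriever: Dedicated to quickly retrieving web page information related to a given query term through the Bing search API. Pass the keywords to search for as the parameter.\n
WebPageScraper: This tool fetches web page content. Pass the URL of the page as the parameter, e.g. https://www.baidu.com/.\n
KnowledgeBaseResponder: This tool connects to the local knowledge base to get answers. Pass the question as the parameter, e.g. "the most suitable growing temperature for strawberries"\n
MeetingScheduler: Used to book a meeting. It takes 4 parameters: the first is the time, the second is the recurrence rule (e.g. \'1000100\' means repeat on Monday and Friday, \'0000000\' means no repeat), the third is the task to perform, and the fourth represents the required […]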