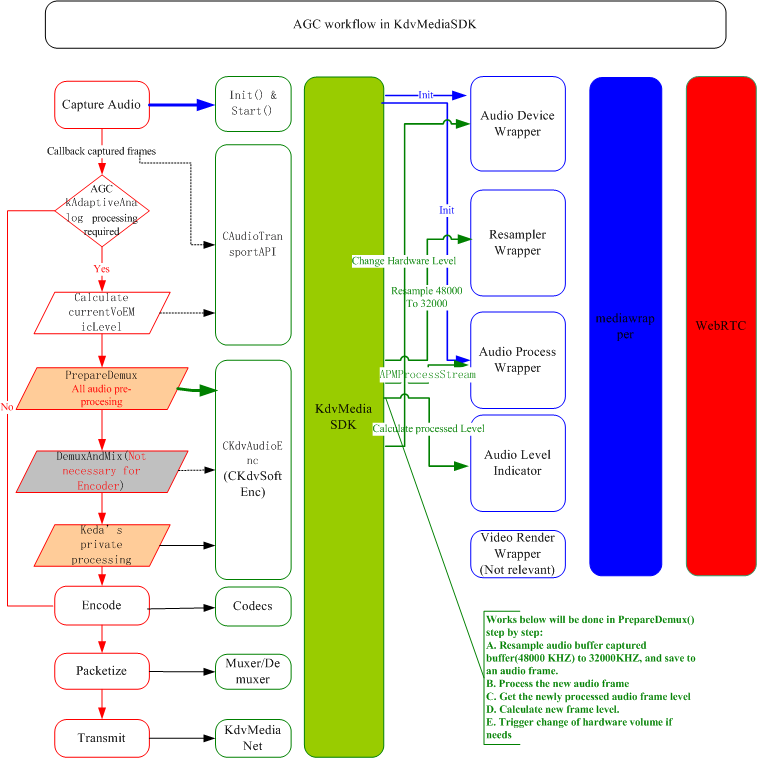

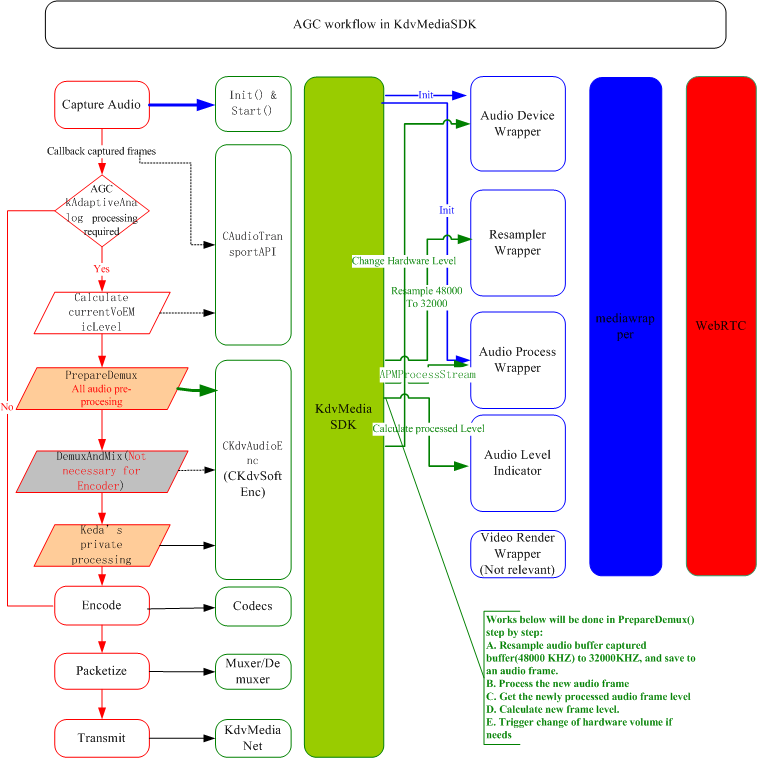

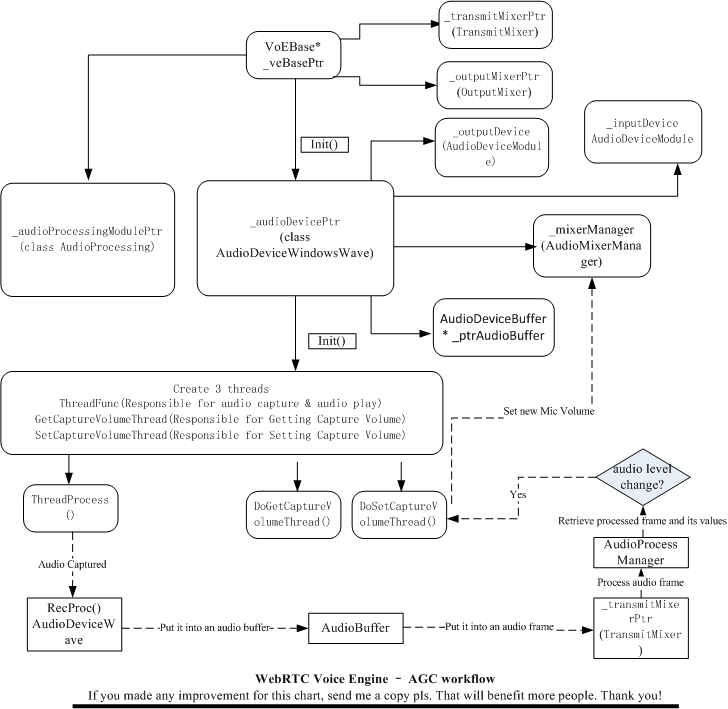

My current job is researching WebRTC, and my first task is wrapping a class from WebRTC that processes audio frames, to implement audio functions such as AEC (acoustic echo cancellation), AGC (automatic gain control), NS (noise suppression), a high-pass filter, etc. The information listed below is from WebRTC.org; you can also view it by visiting http://www.webrtc.org, or it’s […]
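To make the idea of "wrapping a class that processes audio frames" concrete, here is a minimal sketch of such a wrapper. It is an illustration under stated assumptions, not WebRTC's API: the class `AudioFrameProcessor` and its methods are hypothetical names, the input is assumed to be mono 16-bit PCM in 10 ms frames (the frame size WebRTC's audio processing module works with), and only the high-pass stage is filled in, using a plain first-order high-pass filter rather than WebRTC's own filter design. AEC, NS and AGC would be further stages in the same `ProcessFrame` call.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical wrapper for illustration only; this is NOT WebRTC's
// AudioProcessing interface. Assumes mono int16 PCM in 10 ms frames.
class AudioFrameProcessor {
 public:
  AudioFrameProcessor(int sample_rate_hz, double cutoff_hz)
      : frame_size_(static_cast<std::size_t>(sample_rate_hz / 100)) {
    assert(sample_rate_hz > 0 && cutoff_hz > 0.0);
    const double kPi = 3.14159265358979323846;
    // First-order RC high-pass: alpha = RC / (RC + dt).
    const double rc = 1.0 / (2.0 * kPi * cutoff_hz);
    const double dt = 1.0 / sample_rate_hz;
    alpha_ = rc / (rc + dt);
  }

  // Number of samples in one 10 ms frame (e.g. 160 at 16 kHz).
  std::size_t frame_size() const { return frame_size_; }

  // Processes one 10 ms frame in place. A real pipeline would run
  // AEC, NS and AGC after the high-pass stage.
  void ProcessFrame(std::vector<int16_t>& frame) {
    assert(frame.size() == frame_size_);
    HighPass(frame);
  }

 private:
  // y[n] = alpha * (y[n-1] + x[n] - x[n-1]); state carries across frames.
  void HighPass(std::vector<int16_t>& frame) {
    for (auto& sample : frame) {
      const double x = sample;
      const double y = alpha_ * (prev_out_ + x - prev_in_);
      prev_in_ = x;
      prev_out_ = y;
      // Round and clamp back into the int16 range.
      const double r = std::min(std::max(std::round(y), -32768.0), 32767.0);
      sample = static_cast<int16_t>(r);
    }
  }

  std::size_t frame_size_;
  double alpha_ = 0.0;
  double prev_in_ = 0.0;
  double prev_out_ = 0.0;
};
```

Because the filter state is kept in the object between calls, a caller can feed consecutive 10 ms frames one at a time (for example `AudioFrameProcessor p(16000, 100.0);` then `p.ProcessFrame(frame);` per frame) and a constant DC offset decays toward zero across frames, which is the job a high-pass stage does ahead of the other processing steps.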
